
As data growth and sprawl continue, the demand for high-performance, secure, and scalable data storage has become more critical than ever. Yet despite the rising need, the number of available options that meet these requirements is dwindling. As a result, businesses and organizations face significant challenges in selecting a solution that fits their specific needs and budget.

Fortunately, OpenZFS is emerging as a popular and excellent choice for building the storage backbone of any high-performance computing system. OpenZFS is an advanced file system and volume manager that offers robust features such as data compression, deduplication, and checksumming. These capabilities allow organizations to store, manage, and access their data efficiently, securely, and with the highest level of integrity.

What is HPC - High Performance Computing?

High Performance Computing (HPC) refers to the use of advanced computing technologies to solve complex computational problems that require a large amount of processing power. Generally, this is accomplished by clustering together hundreds or thousands of machines to tackle a workload. All of these machines need access to storage, to pull input from, to store their intermediate data, and to store the final output.

High-performance computing (HPC) has revolutionized the way we approach complex problems, enabling researchers and scientists to analyze, simulate, and model complex phenomena that were previously deemed impossible. HPC has a wide range of applications, from physics and climate simulations to weather forecasting, protein folding, oil and gas exploration, financial modeling, machine learning, and artificial intelligence.

In the scientific community, HPC is utilized for simulating complex systems, analyzing vast amounts of data, and generating accurate predictions. For example, climate scientists rely on HPC to develop more accurate models of the earth's climate system and project future changes. Similarly, physicists use HPC to simulate the behavior of subatomic particles and understand the fundamental nature of matter.

In the business world, HPC is used for various applications, such as financial modeling, risk analysis, and forecasting. Financial institutions use HPC to analyze large volumes of data and generate insights for investment decisions, while oil and gas companies use HPC to process seismic data and improve the accuracy of exploration and drilling operations.

Regardless of the application, all HPC workloads demand large amounts of reliable storage to store and manage massive datasets. Minimizing the cost of this storage is crucial in enabling the provisioning of a greater volume of storage while keeping expenses within budgetary limits. As a result, many organizations are turning to open source storage solutions like OpenZFS, which offer robust features like data compression, deduplication, and checksumming to ensure data integrity and reduce storage costs.

What is OpenZFS?

ZFS is an advanced software defined storage file system and volume manager that provides efficient and reliable storage solutions for managing massive volumes of data. It was originally developed by Sun Microsystems for their Solaris operating system but was later made open source and cross platform. Now, OpenZFS is a trusted, successful, and critical part of the storage infrastructure of organizations and institutions of every size all around the world.

Designed to handle exceptionally large amounts of data with ease, while ensuring data integrity and reliability, ZFS does all of this while presenting a unified configuration mechanism that makes storage easier to manage. ZFS also uses a copy-on-write transactional model that ensures instant recovery from unexpected shutdowns so that your data is always protected and there is no risk of data loss due to file system inconsistencies. It also provides advanced features such as transparent data compression, high performance encryption, and instant snapshotting, which allow you to optimize your storage and protect your data from threats such as ransomware.
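To make the copy-on-write idea concrete, here is a minimal toy model in Python. It is not how OpenZFS is implemented; the `CowStore` class and its methods are hypothetical names used only for illustration. It shows the one property that matters here: a write never overwrites an existing block, so a snapshot only has to remember which blocks were live at that moment.

```python
class CowStore:
    """Toy copy-on-write store: blocks are never overwritten in place."""

    def __init__(self):
        self.blocks = {}      # block id -> bytes (never modified once written)
        self.live = {}        # file name -> block id (the active block map)
        self.snapshots = {}   # snapshot name -> frozen copy of the block map
        self._next_id = 0

    def write(self, name, data):
        # Always allocate a new block; the previous block stays untouched.
        block_id = self._next_id
        self._next_id += 1
        self.blocks[block_id] = bytes(data)
        self.live[name] = block_id

    def read(self, name, snapshot=None):
        table = self.live if snapshot is None else self.snapshots[snapshot]
        return self.blocks[table[name]]

    def snapshot(self, snap_name):
        # Only the small block map is copied, never the data blocks.
        self.snapshots[snap_name] = dict(self.live)

    def rollback(self, snap_name):
        # Point the live map back at the blocks the snapshot references.
        self.live = dict(self.snapshots[snap_name])


store = CowStore()
store.write("results.dat", b"run 1")
store.snapshot("before-rerun")
store.write("results.dat", b"run 2")   # the "run 1" block is still intact
```

In this sketch, reading `results.dat` from the `before-rerun` snapshot still returns the original bytes, and `rollback` restores them as the live version. The toy copies a small dictionary per snapshot; a real file system can do even less work by keeping a reference to the root of its block tree.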

What really sets OpenZFS apart is its ability to scale to meet the needs of high-performance computing (HPC) environments. OpenZFS can handle massive amounts of data, from terabytes to petabytes, and it can do so with ease of management and without sacrificing performance. OpenZFS is a powerful and flexible storage solution that provides efficient and reliable storage for managing large volumes of data. It's no wonder that so many companies and organizations rely on it for their storage and data management needs!

Building Resilient, Secure Storage for HPC - The Challenges

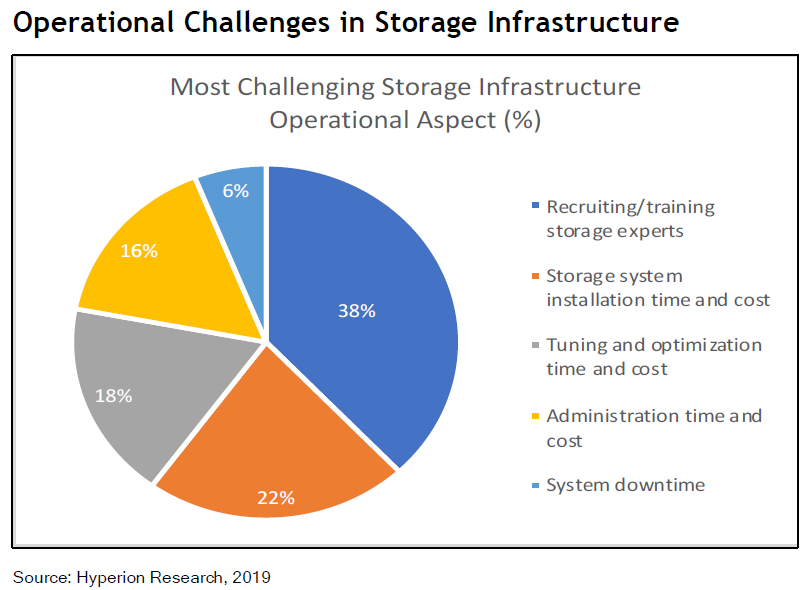

Let's first take a quick look at some of the common challenges when it comes to building overall storage for HPC.

Building storage for HPC workloads can be a complex process that involves various challenges. One of the most significant challenges is scalability, as HPC workloads generate vast amounts of data that need to be stored and managed effectively. Therefore, storage solutions must be scalable to accommodate the growing storage requirements of these workloads. Another common issue is reliability. HPC workloads often involve critical data that needs to be accessed and processed accurately and quickly. Therefore, storage solutions must be reliable and fault-tolerant to prevent data loss or corruption, which could have significant consequences for the HPC workloads and the organizations they serve.

Cost is also a significant consideration when building storage for HPC. The storage requirements of HPC workloads can be massive, and storage costs can add up quickly. Therefore, organizations need to find cost-effective solutions that balance the need for high-performance storage with budgetary constraints.

Data protection is another concern when building storage for HPC workloads. These workloads often involve sensitive or confidential data that requires protection from unauthorized access or data breaches. Therefore, storage solutions must include robust security features, such as encryption and access controls, to ensure the privacy and security of the data stored.

Efficient data management is also crucial when building storage for HPC workloads. HPC workloads generate large amounts of data that need to be processed, analyzed, and stored efficiently. Therefore, storage solutions must include features like data compression and deduplication to optimize data storage and minimize storage costs.

Finally, compatibility is a significant consideration when building storage for HPC workloads. HPC systems often run on specialized hardware and software platforms that require specific storage solutions. Therefore, organizations must ensure that their storage solutions are compatible with their HPC systems to avoid performance issues or data loss.

OpenZFS To Rescue Your HPC Cluster

Apart from the wonderful open source quality of OpenZFS, there are some specific qualities we want to look at:

- Scalability: OpenZFS is designed to scale to massive storage systems. ZFS’s dynamic allocation model means it won’t run out of inodes or hit an artificial limit on total storage capacity. This is important in HPC environments that generate unimaginable amounts of data and create trillions and trillions of objects.

- Data Integrity: OpenZFS uses a copy-on-write architecture to prevent data corruption during a system crash and ensures data integrity using strong checksums. This is especially important for HPC environments where data loss can have serious consequences, or worse, where ingesting incorrect data could taint years of research.

- Snapshots and Clones: OpenZFS provides advanced snapshot and clone capabilities, which enable users to create point-in-time copies of data and quickly roll back to earlier versions. This is useful for HPC environments where experiments and simulations generate large amounts of data that need to be preserved. Taking advantage of the copy-on-write nature of clones allows multiple generations of research to reuse and modify the same data, while preserving shared blocks.

- Performance: OpenZFS provides excellent performance for large file systems, making it well-suited for HPC environments that require high-bandwidth and low-latency storage. OpenZFS is also an excellent backing store for distributed filesystems such as Lustre and Ceph.

- Portability: OpenZFS is open source software and has been ported to a wide range of platforms, making it a flexible and portable solution for HPC environments that use different hardware and software configurations. Whether your HPC cluster runs Linux, BSD, or Solaris, on x86, PowerPC, or ARMv8, OpenZFS is available on your platform, and the storage is portable between all of these platforms.

- Open Source: OpenZFS is an open source software solution, which means that it is freely available and can be customized to meet the specific needs of individual organizations. The thriving community around OpenZFS ensures that the burden of maintaining the software is well shared across a diverse group of contributors, including storage and cloud vendors, appliance and software vendors, and institutional and government users. This eliminates costs associated with licensing fees, especially per TB fees.
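As a rough illustration of the snapshot and clone workflow described in the list above, the following Python sketch builds the corresponding `zfs` command lines without running them. The `zfs snapshot`, `zfs clone`, and `zfs rollback` subcommands are real; the helper function names and the dataset names (`tank/sim`, `tank/sim-experiment-b`) are hypothetical placeholders.

```python
import shlex


def snapshot_cmd(dataset, snap):
    # Point-in-time, read-only copy of a dataset.
    return ["zfs", "snapshot", f"{dataset}@{snap}"]


def clone_cmd(dataset, snap, target):
    # Writable dataset that shares unmodified blocks with the snapshot.
    return ["zfs", "clone", f"{dataset}@{snap}", target]


def rollback_cmd(dataset, snap):
    # Return the dataset to the state captured by the snapshot.
    return ["zfs", "rollback", f"{dataset}@{snap}"]


# Dry run: print the commands instead of executing them.
plan = [
    snapshot_cmd("tank/sim", "baseline"),
    clone_cmd("tank/sim", "baseline", "tank/sim-experiment-b"),
    rollback_cmd("tank/sim", "baseline"),
]
for argv in plan:
    print(shlex.join(argv))
```

Keeping the commands as argument lists makes it straightforward to hand them to `subprocess.run` later on a host where ZFS is installed and the datasets actually exist.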

The Importance of Data Integrity at Scale

Data integrity is a critical requirement for High Performance Computing (HPC) environments, because HPC applications process and generate massive amounts of data. The results of this processing are only as accurate as the inputs, and the cost of generating the data can only be recouped if the data can be used in the future. Data integrity is important in all aspects of HPC. Data must be accurate and must remain consistent throughout its entire lifecycle, from the moment it is generated, as it is stored or transferred, to the moment it is consumed to generate additional data.

HPC’s important role in scientific research, simulation, and modeling means the accuracy of the results is essential. Any errors in the data can lead to inaccurate or invalid conclusions, which can have serious consequences for scientific research, or even risk to life.

Time on HPC clusters is a scarce resource and often expensive. Any errors or inconsistencies in the input data can cause delays in processing or require the results to be thrown out and recalculated, leading to wasted time and resources. If the results are not stored securely, and cannot be retrieved, all of the invested time and resources are lost.

To ensure data integrity in HPC environments, it is important to use storage hardware and file systems that provide data protection and error detection and correction features. OpenZFS provides built-in data integrity protection by combining checksums with redundancy (mirrors, or RAID-Z with up to triple parity), which helps detect and repair data corruption and ensures the accuracy and consistency of the data throughout its lifecycle.
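The interplay between checksums and redundancy can be sketched in a few lines of Python. This is a simplified toy, not OpenZFS code: the function names are hypothetical, and SHA-256 is used here simply because it is readily available in the standard library. The key idea it demonstrates is that a checksum stored apart from the data lets a reader tell which replica is correct, and then repair the damaged one.

```python
import hashlib


def checksum(data):
    return hashlib.sha256(bytes(data)).hexdigest()


def write_mirrored(data, copies=2):
    """Return the expected checksum and a list of mutable replicas."""
    return checksum(data), [bytearray(data) for _ in range(copies)]


def read_verified(expected, replicas):
    """Return the first replica that matches the checksum, repairing the rest."""
    good = next((bytes(r) for r in replicas if checksum(r) == expected), None)
    if good is None:
        raise IOError("all replicas failed checksum verification")
    repaired = 0
    for replica in replicas:
        if bytes(replica) != good:
            replica[:] = good      # overwrite the bad copy with the good one
            repaired += 1
    return good, repaired


expected, replicas = write_mirrored(b"simulation output block")
replicas[0][0] ^= 0xFF             # simulate silent corruption on one device
data, repaired = read_verified(expected, replicas)
```

Without the checksum, a two-way mirror with mismatched copies has no way to know which side is right; with it, the corrupted replica is identified and rewritten from the good one.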

The Cost of Storage in HPC Environments

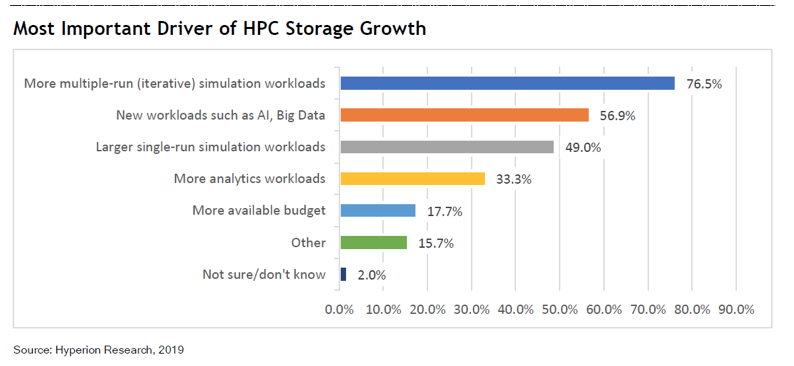

The cost per terabyte is one of the most important considerations in storage, and especially for High Performance Computing (HPC) environments because of the massive amounts of data HPC applications generate and process, often on a scale that is orders of magnitude larger than traditional enterprise applications. As a result, the cost of storage in an HPC environment can be one of the most significant expenses, especially as data volumes continue to grow year on year.

In many cases, HPC environments also require high-performance storage solutions that can handle feeding hungry applications with large volumes of data with fast access times. However, these high-performance solutions can also be expensive, which means that optimizing the cost per terabyte of storage is important for controlling costs and maximizing the return on investment in HPC environments.

ZFS provides several ways to optimize the cost per terabyte. Firstly, its open source nature means there are no per-terabyte licensing costs, and the capital investment is purely in storage capacity and capabilities. The transparent compression, zero elision, deduplication, and block sharing/cloning facilities in OpenZFS reduce overall storage requirements, making the most of the available capacity. ZFS’s Adaptive Replacement Cache (ARC) and prescient prefetch help ensure that the most frequently accessed data, as well as data that is likely to be requested soon, are cached in the fastest storage tier, RAM. Secondly, ZFS provides tiering capabilities for its cache (L2ARC) and storage (storage allocation classes), allowing metadata and small blocks that are inefficient to access from mechanical disks to be stored on a solid-state tier. Using OpenZFS as the software defined storage fabric for HPC ensures the maximum total storage capacity for the investment.
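A back-of-the-envelope calculation shows how these factors combine. The function below is a hypothetical helper, and every number in the example is an illustrative assumption rather than a measurement or a vendor price; real compression ratios depend heavily on the data.

```python
def cost_per_effective_tb(hardware_cost, raw_tb, usable_fraction,
                          compress_ratio, license_per_raw_tb=0.0):
    """Cost per terabyte of data actually stored.

    usable_fraction: share of raw capacity left after parity or mirroring.
    compress_ratio:  logical size divided by physical size (1.0 = none).
    """
    usable_tb = raw_tb * usable_fraction
    effective_tb = usable_tb * compress_ratio
    total_cost = hardware_cost + license_per_raw_tb * raw_tb
    return total_cost / effective_tb


# Assumed figures: 1000 TB raw, 80% usable after parity, 1.5x compression.
open_source = cost_per_effective_tb(100_000, 1000, 0.8, 1.5)

# Same hardware with a hypothetical per-terabyte license and no compression.
licensed = cost_per_effective_tb(100_000, 1000, 0.8, 1.0,
                                 license_per_raw_tb=20)

print(f"open source with compression: {open_source:.2f} per TB")
print(f"licensed without compression: {licensed:.2f} per TB")
```

Under these assumptions the same hardware budget stores each terabyte for noticeably less once licensing fees are removed and compression is applied, which is the effect the paragraph above describes.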

Overall, optimizing the cost per terabyte of storage is an important consideration in HPC environments, where the cost of storage can be the most significant expense. By implementing cost-effective storage solutions and optimizing the use of available storage capacity, organizations can reduce costs while still meeting the performance and capacity requirements of their applications.

OpenZFS In the Wild

OpenZFS is an integral part of many organizations' HPC infrastructure, so much so that some of those institutions have taken advantage of the open source nature of ZFS and invested in expanding its capabilities.

One of the first such efforts was when Lawrence Livermore National Laboratory (LLNL) undertook porting ZFS to Linux, to form the backbone of their Lustre distributed filesystem. They noted that OpenZFS facilitated building a storage system that could support 1 terabyte per second of data transfer at less than half the cost of any alternative filesystem.

Based on the success seen at LLNL, Los Alamos National Laboratory (LANL) started using ZFS as well. As their usage grew, they identified a need for additional functionality in OpenZFS to take advantage of modern NVMe based storage. To this end, they embarked on a ZFS improvement project with two main components. The first is support for DirectIO, which allows applications to bypass the Adaptive Replacement Cache (ARC) when they know data will not benefit from being cached. The second is the ZFS Interface for Accelerators (ZIA), which allows ZFS to take advantage of computational storage hardware to accelerate compression, checksumming, and erasure coding. With these contributions to OpenZFS, LANL was able to massively improve the performance of their HPC cluster, while sharing the future maintenance cost of the improved ZFS across all of the organizations that use it.

In the latest example, just a few months ago the U.S. Department of Energy’s Oak Ridge National Laboratory announced it had built Frontier, the world’s first exascale supercomputing system and currently the fastest computer in the world, backed by Orion, the massive 700 Petabyte ZFS based file system that supports it. This impressive system contains nearly 48,000 hard drives and 5,400 NVMe devices for primary storage, and another 480 NVMe just for metadata.

Conclusions

OpenZFS provides a trusted storage foundation upon which to build massive HPC systems. With its impressive array of features for data integrity and storage optimization, it provides a high performance and low cost-per-terabyte solution for critical storage infrastructure. Choosing ZFS ensures that your organization's investment in HPC includes a high performance and truly capable storage component without stretching the budget.

Allan Jude

Principal Solutions Architect and co-Founder of Klara Inc., Allan has been a part of the FreeBSD community since 1999 (that’s more than 25 years ago!), and an active participant in the ZFS community since 2013. In his free time, he enjoys baking, and obviously a good episode of Star Trek: DS9.
