Improve the way you make use of FreeBSD in your company.

Find out more about what makes us the most reliable FreeBSD development organization, and take the next step in engaging with us for your next FreeBSD project.

FreeBSD Support FreeBSD DevelopmentAdditional Articles

Here are more interesting articles on FreeBSD that you may find useful:

- What We’ve Learned Supporting FreeBSD in Production (So You Don’t Have To)

- Maintaining FreeBSD in a Commercial Product – Why Upstream Contributions Matter

- Owning the Stack: Infrastructure Independence with FreeBSD and ZFS

- Inside FreeBSD Netgraph: Behind the Curtain of Advanced Networking

- Core Infrastructure: Why You Need to Control Your NTP

Some applications spend much of their time shuttling data between a pair of TCP connections. Common examples include proxies of various types, which connect to and exchange data with a host on behalf of a client, and load balancers, which transparently route client connections to a suitable endpoint. Such applications may need to inspect portions of the data stream, for example in the case of an HTTP proxy, but generally they blindly copy the bulk of the stream from one connection to the other.

When using classic UNIX interfaces, such applications ultimately loop over a pair of TCP sockets, reading data from one socket into a buffer and writing the buffer to the other socket. This is simple but not necessarily cheap. Consider what happens when a byte of data arrives on socket S1: the kernel will append it to a buffer of available data, at which point:

- Kernel may need to wake up an application thread, which involves selecting a CPU on which to run and potentially waiting for the CPU to become available.

- Application thread needs to call the read(2) system call, asking the kernel to copy data from the socket buffer into a user-supplied buffer.

- Application thread then needs to call the write(2) system call, asking the kernel to allocate space in the destination socket buffer and copy the data over.

- Finally, the kernel can pass the data to the TCP layer for eventual transmission.

Overheads of Traditional Proxying

This approach carries with it several fundamental costs:

- A userspace thread must be scheduled when data arrives. This may be avoided if the thread is already running, which may be the case if the application is busy or uses polling (instead of kevent(2) or similar) to reduce latency.

- The data being proxied must be copied twice, once into userspace and once out. The overhead of the second copy may be small since data being copied will likely be in the CPU cache, but this also reduces the amount of CPU cache available for other purposes.

- System calls themselves have non-zero overhead, especially when mitigations for certain speculative CPU execution vulnerabilities are enabled.

Reducing Overhead: Userspace vs. Kernel Proxying

These observations are nothing new, and today there are many ways to avoid most of these overheads, albeit using OS-specific interfaces. In particular, the second and third costs listed above are a consequence of implementing proxying in userspace when TCP is implemented in the kernel. Consequently, switching and copying data between protection domains is inherently expensive. Then, to avoid those costs, we either have to bring the TCP stack into userspace or bring proxying into the kernel.

Userspace network stacks, à la DPDK or netmap, make many network-intensive workloads much more efficient, but these frameworks generally require applications to be designed around them. If we want to take an existing socket-based TCP proxy application and make it more efficient by eliminating data copying, we may be forced to take proxying into the kernel.

SO_SPLICE: Enabling Kernel Offloading on FreeBSD

A client was interested in having kernel TCP proxying available on FreeBSD and contracted Klara to implement a solution. When a proxy application runs on embedded hardware, as is the case for this client, the performance gained by eliminating the copying and userspace-kernel transitions described earlier can be quite significant. They noted that OpenBSD has had a TCP splicing interface going way back to 2011.

How TCP Splicing Works

The idea there is to take a pair of connected TCP sockets and declare to the kernel that they are to be spliced together: all data received on one of the sockets is to be automatically forwarded to the other without any intervention by userspace. This effectively gives the kernel control of the two sockets: userspace will not "see" any of the data as it arrives on either socket. Under some conditions the socket pair may be unspliced, at which point they can be treated as normal sockets again.

Our example TCP proxy shows how to splice two sockets together using SO_SPLICE on FreeBSD: given a pair of sockets, setsockopt(2) is used to create a pair of one-way splices. To unsplice a socket, use SO_SPLICE to splice it with -1 instead of a valid socket.

Comparing SO_SPLICE to Linux and OpenBSD

Similar functionality can be had on Linux. A nice blog post by Cloudflare enumerates several general-purpose kernel interfaces that can be used to splice two sockets together with various tradeoffs. For our purposes, the OpenBSD interface was more attractive: it is simple and solves only the problem at hand, unlike, for example, Linux's splice(2), which is far more general. SO_SPLICE follows the pattern of sendfile(2) in that it was designed to serve a specific need. While a bit ugly from an interface perspective, it is much easier to implement than splice(2) and supports features useful for layer 7 proxies, namely data transfer limits and timeouts, described further below.

Finally, implementing OpenBSD's interface means that we can enable splicing in relayd without any extra effort. relayd is a popular load balancer and proxy server which originates from OpenBSD and has an active FreeBSD port.

Implementing SO_SPLICE

Configuration and Setup

The implementation of SO_SPLICE broadly consists of two pieces:

- Configuration bits which allow one to create or destroy a splice using setsockopt(2).

- Data path which hands data from one end of a splice to the other.

The configuration interface is exposed via setsockopt(2), where the SO_SPLICE option handler is implemented. This handler looks up the socket referenced by the sp_fd field of the argument, verifies that both sockets possess the necessary Capsicum rights, and then forwards them to the so_splice() function. Additionally, it invokes splice_init() for one-time initialization related to SO_SPLICE, specifically setting up a pool of threads responsible for transferring data between socket buffers and into the network stack.

Handling the Splice State

so_splice() in turn sets up a struct so_splice, which holds state related to the splice, including two back-pointers to the sockets that are spliced together. Recall that splices are one-way – to exchange data symmetrically between two TCP sockets, userspace needs to invoke setsockopt(SO_SPLICE) twice, once for each direction. In this case, we end up with two struct so_splice instances. Thus, in the context of a single splice, one socket is the "source", and the other is the "sink": data which arrives in the receive buffer of the source socket is moved to the send buffer of the sink socket. so_splice() is also responsible for assigning a worker thread to the splice.

User Interaction and System Call Behavior

so_splice() does little except allocate a per-splice state structure and mark the two sockets as spliced together. Once in this state, userspace can interact with the sockets as before, but the select(2), poll(2), and kevent(2) system calls will not return the spliced sockets as readable. The intent is that userspace should block waiting for an event to occur on the spliced socket. This can arise if the connection is closed, or the splice is configured to end after a certain amount of data has been transferred, or a timeout has elapsed.

Data Transfer and Unsplicing

Once a socket is spliced, protocol layers (i.e., TCP) append to the source's receive buffer normally. When data is appended, rather than waking up userspace, the socket layer wakes up a thread from the thread pool.

Splice worker threads spend most of their time executing so_splice_xfer(), which uses various internal socket functions to move chains of packet data from the source's receive buffer to the sink's send buffer. When it is no longer possible to move data, for example because the connection is closed, the splice thread handles unsplicing the sockets, at which point the sockets are returned to normal operation.

Evaluating the Performance of SO_SPLICE

Because SO_SPLICE lets the kernel avoid a bunch of copying and system calls, we should expect to see some performance improvements in at least two scenarios:

- Bulk data transfers, where a traditional proxy would have to do a lot of copying. In particular, we would like to see a reduction in CPU usage on the system running the proxy, and possibly an increase in throughput.

- Data transfer latency. A reduction in latency may be observable with a "ping-pong" test, where a client sends a small amount of data to a server and receives a short response. The client then measures the time elapsed between sending its message and receiving the server's reply.

Measuring Bulk Data Transfer Efficiency

To evaluate the first metric, we implemented a simple TCP proxy server. It can be configured to either copy data through userspace, or to use SO_SPLICE to bridge two TCPconnections. To generate bulk data, we use iperf3, a common TCP benchmarking tool.

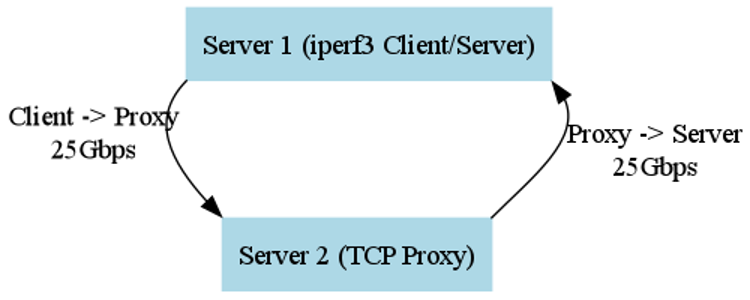

In our benchmark setup, we ran two 80-core Ampere Altra systems connected by a 25Gbps link. Because we only have the two systems, we run two iperf3 instances on the first system and route all data through the proxy running on the second machine, all over the same full-duplex link:

The diagram illustrates the test environment, where iperf3 runs on Server 1, sending and receiving data through a TCP proxy on Server 2. The proxy routes traffic between the client and server over a 25Gbps link to measure the impact of SO_SPLICE on performance.

To get a rough idea of the CPU usage, we ran:

# iperf3 -c <proxy address> -p <proxy port> -P 4

twice, once with the proxy running in splice mode, and once with the proxy running in userspace. With four parallel TCP streams, the link is saturated in both modes, i.e., the same amount of data is being transferred.

Using vmstat 1 to monitor CPU usage, we get the following:

SO_SPLICE proxying:

procs memory page disks faults cpu

r b w avm fre flt re pi po fr sr nda0 pas0 in sy cs us sy id

0 0 0 1.0T 122G 0 0 0 0 0 30 0 0 307 131 703 0 0 100

0 0 0 1.0T 122G 10 0 0 0 0 30 0 0 361k 201 782k 0 3 96

0 0 0 1.0T 122G 356 0 0 0 72 30 0 0 480k 767 1.0M 0 4 95

0 0 0 1.0T 122G 3.1k 0 0 0 12 30 0 0 478k 383 1.0M 0 4 95

0 0 0 1.0T 122G 610 0 0 0 3 30 0 0 479k 184 1.0M 0 3 96

0 0 0 1.0T 122G 608 0 0 0 3 30 0 0 478k 167 1.0M 0 3 96

0 0 0 1.0T 122G 608 0 0 0 3 30 0 0 478k 167 1.0M 0 4 95

0 0 0 1.0T 122G 609 0 0 0 3 30 0 0 480k 167 1.0M 0 3 95

0 0 0 1.0T 122G 609 0 0 0 3 30 0 0 480k 167 1.0M 0 3 96

0 0 0 1.0T 122G 609 0 0 0 3 30 0 0 479k 167 1.0M 0 4 95

0 0 0 1.0T 122G 623 0 0 0 153 30 0 0 342k 202 743k 0 2 97

userspace proxying:

procs memory page disks faults cpu

r b w avm fre flt re pi po fr sr nda0 pas0 in sy cs us sy id

0 0 0 1.0T 122G 0 0 0 0 0 30 0 0 306 131 682 0 0 100

4 0 0 1.0T 122G 2.7k 0 0 0 15 30 0 0 177k 90k 361k 0 3 96

3 0 0 1.0T 122G 0 0 0 0 0 30 0 0 330k 180k 682k 0 6 93

4 0 0 1.0T 122G 0 0 0 0 0 30 0 0 370k 180k 764k 0 6 93

4 0 0 1.0T 122G 0 0 0 0 0 30 0 0 371k 180k 766k 0 6 93

4 0 0 1.0T 122G 0 0 0 0 0 30 0 0 372k 180k 766k 0 6 93

4 0 0 1.0T 122G 0 0 0 0 0 30 0 0 371k 180k 767k 0 6 93

4 0 0 1.0T 122G 0 0 0 0 0 30 0 0 369k 180k 762k 0 6 93

3 0 0 1.0T 122G 0 0 0 0 0 30 0 0 371k 180k 765k 0 6 93

4 0 0 1.0T 122G 0 0 0 0 0 30 0 0 370k 180k 763k 0 6 93

4 0 0 1.0T 122G 4 0 0 0 713 30 0 0 354k 172k 730k 0 5 94

The three columns on the right show system CPU usage on the proxy host for the ten-second window where iperf3 is running on the other host. A couple of observations are immediately visible:

- CPU usage when using SO_SPLICE is lower, as hoped. The system has a couple of extra percentage points of idle CPU available, which might not sound like a lot. But consider that the host has 80 cores – a single core represents 1.25% of the system's CPU capacity.

- Even when proxying in userspace, virtually all of the system's CPU time is spent in the kernel. This might be a bit surprising at first, but it makes sense: the proxy is doing nothing but read(2) into a buffer and passing that same buffer to write(2), so all the copying happens in the kernel.

Impact on Memory Bandwidth and TCP Throughput

Aside from reducing CPU usage, the elimination of copying should also save memory bandwidth on the system. While memory bandwidth is not often a bottleneck on modern server systems, it can be a limiting factor in some very I/O-heavy workloads.

Single-stream TCP throughput is also improved by making the proxy use SO_SPLICE. In particular, when proxying in userspace, iperf3 reports a throughput of 7.6Gbps, and the proxy process is consuming 100% of a CPU. Switching to SO_SPLICE increases the throughput to roughly 9.6Gbps. So, by using SO_SPLICE, we can process TCP streams more efficiently and make better use of available bandwidth.

Evaluating Latency Reduction

To evaluate the impact of SO_SPLICE on latency, we wrote a little ping-pong application which displays the time elapsed between sending a short message over TCP and receiving a response. Running this application through the proxy server would hopefully show a reduction in latency when using SO_SPLICE. This is because in that case the proxy avoids processing each message, and no system calls are needed.

Benchmark Setup and Testing Conditions

pingpong can run in client or server mode. In server mode, it accepts new connections and responds to messages with an equal-length message. In client mode, clock_gettime(CLOCK_MONOTONIC) is used to measure the time elapsed from sending a message to receiving a response from the server. This is done a configurable number of times on the same connection, and the first time is discarded.

System calls are very cheap, and we do not expect to see a large reduction in latency when using SO_SPLICE. To try and make the effect more visible, we ran this test with all relevant threads pinned to the same CPU on a single host, this time using an AMD 7950X3D. In particular, the splice worker threads must be pinned using cpuset -l <core ID> -p <splice worker process>, ditto the netisr thread(s) which handle loopback TCP traffic. We additionally run a low-priority busy loop in the background on the same CPU. This is an attempt to ensure that CPU remains at a consistent performance level and to discourage the scheduler from running unrelated threads on the target CPU, as FreeBSD does not have proper CPU isolation facilities.

Note: Without this, there is a bimodal distribution of latencies in both copy and splice modes.

Results and Observations

When ping-ponging through the proxy in copy and splice modes, we indeed see a small but consistent reduction in latency, here displayed (in microseconds) by the venerable ministat(1) utility.

$ ministat ~/tmp/copy.txt ~/tmp/splice.txt

x /home/markj/tmp/copy.txt

+ /home/markj/tmp/splice.txt

+-----------------------------------------------------------------------------+

| ++ ++ xx x |

| ++++++++ ++ xxxx x x |

| ++++++++ +++ + + + xxxxxxxxxx x +xx x + x|

||________M____A_____________| |___M_A______| |

+-----------------------------------------------------------------------------+

N Min Max Median Avg Stddev

x 30 10.29 11.33 10.45 10.514 0.20685827

+ 30 9.03 10.96 9.2 9.3563333 0.44852619

Difference at 95.0% confidence

-1.15767 +/- 0.180538

-11.0107% +/- 1.68549%

(Student's t, pooled s = 0.349261)

Future Directions and Availability of SO_SPLICE

The SO_SPLICE feature has landed in FreeBSD and will be available out-of-the-box in 14.2-RELEASE. The net/relayd port has also been updated to make use of it by default in the upcoming FreeBSD 15.0release. The benchmarks described above give us some confidence that SO_SPLICE is, at the very least, no worse than userspace proxying on server hardware.

FreeBSD's SO_SPLICE implementation currently only supports TCP sockets, while on OpenBSD it also supports UDP. As we did not have any immediate requirements for UDP support, it has been left unimplemented but could be added in the future if the need arises.

One other potential future enhancement will be to extend SO_SPLICE to support KTLS, FreeBSD's kernel TLS implementation. This would enable the use of SO_SPLICE in TLS relays which make use of KTLS. Certain parts of the TLS protocol must be handled in userspace. However, bulk data transfers may be offloaded to the kernel, similar to how sendfile(2) enables file transfers over TLS without copying data in and out of userspace.

Klara Inc. continues to contribute development and performance optimizations to FreeBSD—if your organization is looking to integrate SO_SPLICE, would like to investigate KTLS support or otherwise optimize network performance, our team can provide development expertice and support.

Mark Johnston

Mark is a FreeBSD kernel developer living in Toronto, Canada. He's been a part of the FreeBSD open source community for almost a decade now, and a user and active contributor of FreeBSD since 2012. He's especially interested in improving the security and robustness of the FreeBSD kernel.

In his spare time, he enjoys running and practicing playing the cello.

Learn About Klara